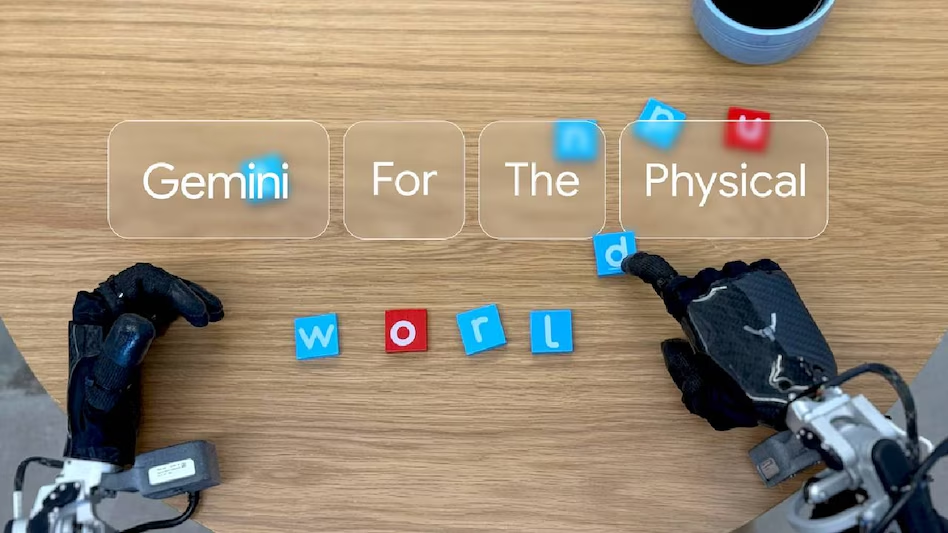

Google DeepMind has announced two new AI models, Gemini Robotics and Gemini Robotics-ER, designed to improve the intelligence and dexterity of robots. Both build on Gemini 2.0, Google's latest multimodal AI model, and aim to close the gap between what a robot perceives and the physical actions it takes.

According to Carolina Parada, Senior Director and Head of Robotics at Google DeepMind, the new models bring improvements in three key areas: generality, interactivity, and dexterity. "We're drastically increasing performance in these areas with a single model," Parada stated. "This enables us to build robots that are more capable, more responsive, and more robust to changes in their environment."

Introducing Gemini Robotics

Gemini Robotics is a vision-language-action model that allows robots to dynamically process and act upon new situations, even those for which they have not been explicitly trained. This model empowers robots to:

Understand and adapt to new environments without prior training.

Interact naturally and responsively with humans and their surroundings.

Perform precise physical tasks that demand fine dexterity, such as folding paper or removing a bottle cap.

By improving adaptability and physical interaction, Gemini Robotics is a major step toward general-purpose autonomous robots that can work in real-world settings.

Advancing Embodied Reasoning with Gemini Robotics-ER

In addition to Gemini Robotics, DeepMind introduced Gemini Robotics-ER (Embodied Reasoning), an advanced vision-language model designed to help robots better understand and interact with complex environments. As Parada explained, “For example, if you’re packing a lunchbox, you need to know where everything is, how to open the lunchbox, how to grasp items, and where to place them. That’s the kind of reasoning Gemini Robotics-ER enables.”

The model lets roboticists connect its reasoning to their existing low-level controllers, which makes it easier to build new capabilities on top of robots they already have. Embodied reasoning also strengthens a robot's problem-solving, allowing it to make decisions in real time.

Safety and Responsible AI Deployment

As robotic systems become more autonomous, DeepMind says it treats safety as a top priority. Vikas Sindhwani, a researcher at Google DeepMind, described the company's layered approach to safety, which is intended to ensure that the models are deployed responsibly. "Gemini Robotics-ER models are trained to evaluate whether or not a potential action is safe to perform in a given scenario," Sindhwani explained.

DeepMind is also releasing new benchmarks and frameworks to advance AI safety research. This builds on the foundation of Google DeepMind’s “Robot Constitution,” a set of AI safety rules inspired by Isaac Asimov’s laws of robotics.

Collaborations with Leading Robotics Firms

To drive the next generation of humanoid robots, Google DeepMind is collaborating with top robotics firms, including Apptronik, Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools. These partnerships aim to integrate AI-driven intelligence into various robotic embodiments and applications.

“We’re very focused on building intelligence that understands the physical world and acts upon it,” Parada stated. “We’re excited to leverage these models across multiple embodiments and applications.”

With the introduction of Gemini Robotics and Gemini Robotics-ER, Google DeepMind continues to push the boundaries of robotic intelligence, paving the way for more capable, responsive, and autonomous robotic systems in real-world environments.
